November 23, 2016

Unplugged: What I learned about people and technology while writing QViewer

This post is not about BI technology or vendors or data analysis. "Unplugged" is a new kind of article on this blog: personal observations with a bit of philosophical contemplation, if you will. Today I'm writing about what I've learned while developing and selling QViewer -- a side project that started as a "quick and dirty" tool for personal use and then became an interesting business experiment that taught me a few new things about people and technology:

You can make and sell things as your side project. It's doable. I remember that somewhat awkward feeling when I received the first-ever payment for software that I made myself. It was very unusual. I had experience of selling enterprise software with a 6-digit price tag, but that was someone else's business. Getting your own first sale in the $50 range was no less exciting, if not more.

People in general are good. Once you start selling software you interact with people all over the world. And it turns out that people are generally good around the globe. I was surprised how many very grateful and positive people there are. It's probably the most unexpected and gratifying outcome of the whole project.

Some people cheat with licenses. Despite the fact that the cheapest QViewer license costs less than a dinner for two, and, unlike the dinner, is acquired forever -- they still cheat. I understand it's part of human nature -- feeling frustration and pity when someone steals from you and at the same time enjoying the benefits of stealing from someone else, even if it's just pocket money. People are complicated animals. So I'm not saying anything about the people that cheat. I'm deeply content that the majority is honest. Humanity definitely has a chance to survive :)

Some people are strange. No need to deal with them. After all, doing business is a two-way street. I remember one person who demanded a sales presentation, a webex demonstration and a detailed commercial proposal for QViewer because he and "his guys" wanted to evaluate whether it was worth spending a couple of hundred dollars. I replied that I was ready to answer any specific questions, and suggested trying the free QViewer to get an idea of the product. I never heard from him again.

95% of technical support time is spent on 5% of customers. Some people are just like that -- they don't read instructions, forget things, don't check spam folders before complaining that their key didn't arrive, can't figure out what instance of QViewer they're launching, etc. It's OK, they just need more help. After all, adults are just grown up kids.

User recommendations are the best advertisement. So far I've spent exactly $0 on advertising QViewer. Yet, it's quite popular, mostly because of user recommendations. For me it was a good example of what it looks like when you've made something useful. If people recommend it to each other -- you're on the right path.

1 out of 10 orders is never paid. Spontaneous decisions, no problem.

Payment reminders work. Sometimes, your invoice sent to a customer may be buried in his/her email box under a pile of unread messages. Sending a friendly reminder once might help. Just once, that's enough for those who are really looking to buy.

Even small side projects can be extremely good for career opportunities. Needless to say, mentioning QViewer in my CV helped me tremendously in finding new employers (when I looked for them). I would argue that the salary increase it enabled has earned me more than selling QViewer licenses alone.

Developer tools are amazing nowadays. I wrote my first program in 1986. It was in BASIC on a military-grade DEC-type computer. In the 90s I wrote programs in C++, Pascal and Assembly. Between 1998 and 2011 I didn't write a single line of code (except some Excel macros). Boy, how things have changed since then. When I started writing QViewer in 2012 I was totally fascinated with the capabilities of Visual Studio and C#. Later I fell in love with F# but that's a different story. And thank God we have StackOverflow. Writing software has never been easier.

Obfuscate and protect your software. Sooner or later someone will try to disassemble your software for a purpose that might be disappointing for you. There is no absolute protection, but raising the barrier can significantly complicate the task. Once I interviewed a developer for EasyMorph. Trying to impress me, the guy told me that he also wrote a QVD viewer. However, after not answering a few questions about the QVD format he quickly admitted that he just disassembled and repacked some components of QViewer. I learned a lesson that day.

Writing and selling software changed my perception of the software industry. I understood what it takes to create it. I stopped using any pirated programs. Now I use only licensed software, even if it's rather expensive (I'm looking at you, Microsoft), and I always donate when a program is available for free but donations are accepted.

November 21, 2016

A simple join test that many fail

From time to time I happen to interview BI developers, and I have noticed that many of them don't understand how joins work. That's probably because most of the time they have worked with normalized data in transactional systems, where primary keys always exist and are defined by the database design. In order to figure out if the candidate has a basic understanding of joins I ask him/her to answer the question below (without executing any actual query):

Hint: the correct answer is not 4. If you're unsure whether your answer is correct see this short video where both tables are joined using EasyMorph: https://www.youtube.com/watch?v=RYCtoRTEk84, or check out this SQLFiddle: http://sqlfiddle.com/#!9/60011/11/0

Not understanding joins sooner or later leads to uncontrolled data duplication in cases where joined tables are denormalized, which is a frequent cause of miscalculations in analytical applications.
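To make the duplication effect concrete, here is a minimal Python sketch of an inner join on a key that is not unique in either table. The tables `orders` and `regions`, their fields and their values are made up for illustration; they are not the tables from the question above.

```python
def inner_join(left, right, key):
    """Naive inner join: each left row pairs with every right row that has the same key."""
    return [
        {**l, **r}
        for l in left
        for r in right
        if l[key] == r[key]
    ]

# Hypothetical denormalized tables: "id" is NOT unique in either of them.
orders = [
    {"id": 1, "amount": 100},
    {"id": 1, "amount": 200},
    {"id": 1, "amount": 300},
    {"id": 2, "amount": 50},
]
regions = [
    {"id": 1, "region": "East"},
    {"id": 1, "region": "West"},
    {"id": 3, "region": "North"},
]

joined = inner_join(orders, regions, "id")
total = sum(row["amount"] for row in joined)

print(len(joined))  # 6 rows: 3 x 2 matches for id = 1, nothing for id = 2 or 3
print(total)        # 1200, although the original amounts for id = 1 add up to 600
```

The summed amount doubles silently because every order row with id = 1 is repeated once per matching region row. That is exactly the kind of miscalculation that goes unnoticed in an analytical application.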

UPDATE: Added a link to SQLFiddle (kudos to Devon Guerro).

November 15, 2016

Now we know where Tableau is heading. Where is Qlik going?

At its recent conference Tableau unveiled its three-year roadmap. Briefly, it includes:

- High-performance in-memory engine based on Hyper (in the timeframe that I predicted earlier)

- Enhanced data preparation capabilities (Project Maestro)

- Built-in data governance

- Pro-active automatically designed visualizations

- Tableau Server for Linux

Project Maestro is another inevitable move from the Tableau people, who now realize that self-service data analysis requires self-service data transformation. Tableau is still reluctant to build a fully-featured ETL for business users like EasyMorph; however, once Project Maestro is implemented, the advantage of having built-in ETL capabilities in Qlik will be diminished (but not dismissed).

Now that Tableau has a clear advantage on the data visualization side and is turning from a fancy add-on to databases into a more and more self-contained analytical platform, the question is -- where is Qlik going?

QlikView is not actively developed anymore. In 90% of cases, the recent developments on the Qlik Sense side are focused on expanding API capabilities, while its data visualization capabilities remain frugal. Honestly, I don't understand this development logic. I would understand it if Qlik's product strategy assumed heavy reliance on 3rd party tools for decent data visualization and analysis. However, so far I struggle to see any high-quality 3rd party tools built on top of the Qlik Sense API that can complement the built-in visualizations. Qlik Market might have a few interesting extensions, but they're typically very specialized. Qlik Branch lacks high-quality extensions and is full of experimental projects that are no longer supported. Qlik itself doesn't promote any 3rd party tools and its product roadmap is yet to be seen.

So where is Qlik going?

Labels: Qlik, Qlik Sense, Tableau

September 4, 2016

How to use the memstat metrics in QViewer

Starting from version 3.1 QViewer shows two memstat metrics in the Table Metadata window:

- Size, bytes -- total size of the column in bytes

- Avg.bytes per symbol -- average size of column values in bytes

These metrics are calculated similarly to the memstat data available in QlikView (not available in Qlik Sense so far). Since the structure of QVD files is very close to the internal in-memory data format in Qlik, these metrics can be used to optimize (reduce) the memory footprint of resident tables, which can be desirable for particularly large applications. The most convenient way to inspect resident tables in QViewer is to set up a simple generic subroutine as described here. Alternatively, you can insert temporary STORE statements to save resident tables into QVDs and then open them in QViewer manually.

When looking at the memstat metrics in QViewer you would typically want to identify the columns that take up the most space (hint: click column headers in Table Metadata to sort the grid). A few things that you can do to reduce table size:

- Remove unnecessary columns that take a lot of space

- Force Qlik to convert duals to numbers by multiplying them by 1

- Trim text values to remove trailing and leading spaces

- Use integers instead of floats where possible

- Round floats to fewer decimal digits to have fewer distinct values in the column

- Use autonumbers instead of long composite text keys
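Several of the tips above come down to having fewer (or shorter) distinct values in a column. A rough way to see the effect of trimming and rounding is to count distinct values before and after. The Python sketch below only illustrates that idea on made-up sample columns; it does not reproduce how QViewer or Qlik actually compute the memstat metrics.

```python
def distinct_count(values):
    """Number of distinct values in a column."""
    return len(set(values))

# Hypothetical sample columns.
cities = ["Boston", "Boston ", " Boston", "Toronto", "Toronto "]
prices = [19.991, 19.994, 19.99, 5.001, 5.0]

trimmed = [c.strip() for c in cities]    # remove leading and trailing spaces
rounded = [round(p, 2) for p in prices]  # keep two decimal digits

print(distinct_count(cities), "->", distinct_count(trimmed))  # 5 -> 2
print(distinct_count(prices), "->", distinct_count(rounded))  # 5 -> 2
```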

Remarks

Qlik uses a special format for columns with incrementally increasing integers (autonumbers) -- they basically don't take up any space in memory, although QVDs store them as regular columns.

The memstat metrics are calculated correctly even if QVDs are partially loaded in QViewer. Therefore you can use them in the free version of QViewer, or when partial loading was used.

QVDs generated in Qlik Sense v.2 and above are compatible with QVDs generated in QlikView and therefore can be opened in QViewer as well.

The total size is NOT simply the avg. symbol size multiplied by # of rows -- it's calculated using more complicated logic that accounts for data compression.

Labels: Qlik Sense, QlikView, QViewer

August 1, 2016

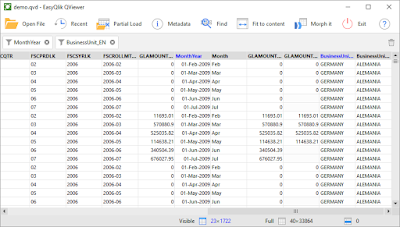

QViewer v3: Qlik-style filtering and full table phonetic search

A new major version of QViewer is out. You can download it here: http://easyqlik.com/download.html

Here is what's new and exciting about it:

Qlik-style filtering

The headline feature of the new version is the ability to filter tables in the Qlik fashion using listboxes. Applied filters can be seen in the filter bar that appears above the table (see screenshot above).

When a selection is made, value counters update automatically. The green bar charts behind the counters hint at current selection count vs total count ratio.

Note that nulls are also selectable.

Full table search

It is now possible to search for a value in the entire table. The current selection becomes limited to only the rows where the searched value is found. Full table search is basically another kind of filter. When it's applied, it can be seen in the filter bar.

Phonetic search mode

The full-table search and the listboxes allow looking up values by matching them phonetically. This is helpful when you don't know for sure the spelling of a word in question. For instance, if you search for "Acord", the values "Accord", "Akord" and "Akkort" will match. Phonetic search works for whole words only. Currently, only English phonetic matching is supported.

Cell metadata

You can view additional metadata of a value in question using the Cell Metadata floating window. This is particularly helpful for easy detection of leading and trailing spaces in text values.
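As a side note on the phonetic search mode described earlier: which phonetic algorithm QViewer uses is not stated here. The Python sketch below uses the classic Soundex code purely as an assumption, to show how whole-word phonetic matching can work in principle. Under Soundex, "Acord", "Accord", "Akord" and "Akkort" all get the same code.

```python
# Soundex digit for each consonant; vowels, y, h and w have no digit.
CODES = {}
for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                       ("l", "4"), ("mn", "5"), ("r", "6")):
    for ch in letters:
        CODES[ch] = digit

def soundex(word):
    """Classic 4-character Soundex code of a single word."""
    letters = [c for c in word.lower() if c.isalpha()]
    if not letters:
        return ""
    result = letters[0].upper()
    prev = CODES.get(letters[0], "")
    for ch in letters[1:]:
        if ch in "hw":
            continue              # h and w do not separate letters with the same code
        code = CODES.get(ch, "")
        if code and code != prev:
            result += code        # a new consonant sound
        prev = code               # a vowel resets prev, so a repeated code counts again
    return (result + "000")[:4]   # pad or cut to 4 characters

def phonetic_match(query, values):
    """Values whose Soundex code equals that of the query word."""
    target = soundex(query)
    return [v for v in values if soundex(v) == target]

print(phonetic_match("Acord", ["Accord", "Akord", "Akkort", "Civic"]))
# ['Accord', 'Akord', 'Akkort']
```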

New license key format

The new version requires upgrading license keys. All license keys purchased after 1st of August, 2015 will be upgraded for free. License keys purchased prior to that date are upgraded at 50% of the current regular price. To upgrade a license key please send me your old key and indicate the email address it is linked to.

July 9, 2016

The three most important habits of a data analyst

I've been doing data analysis for almost 15 years -- mostly using Excel and Business Intelligence tools. And from the very first year I have believed that accuracy is the biggest challenge for a data analyst. Accuracy is fundamental because if a calculation result is incorrect then everything else that is based on it -- visualizations, judgements and conclusions -- becomes irrelevant and worthless. Even performance is not so important, because sometimes you can solve a performance problem by throwing in more hardware, but that would never fix incorrect calculation logic.

Ensuring accuracy is probably the most important skill a data analyst should master. To me, striving for accuracy is a mental discipline developed as a result of constant self-training, rather than something that can be learned overnight. There are three practical habits to develop this skill:

1) Sanity checks. These are quick litmus tests that allow detecting grave errors at early stages. After you get a calculation result for the first time, ask yourself -- does it make sense? Does the order of magnitude look sane? If it's a share (percentage) of something else -- is it reasonably big/small? If it's a list of items -- is it reasonably long/short? Sounds like a no-brainer but people tend to skip sanity checks frequently.

2) Full assumption testing. In my experience this habit is the one most overlooked by beginner analysts. Assumptions should not be opinions, they must be verified facts. "We're told that field A has unique keys" -- verify it by trying to find duplicate values in it. "Field B has no nulls" -- again, verify it by counting nulls or checking data model constraints (where applicable). "They said that Gender is encoded with M and F" -- verify it by counting distinct values in field Gender. Whatever assumptions are used for filtering, joining or calculation -- absolutely all of them must be tested and confirmed prior to doing anything else. Once you develop this habit you will be surprised how often assumptions turn out to be wrong. A good data analyst can spend a few days verifying assumptions before even starting to analyze the data itself. Sometimes assumptions are implicit -- e.g. when we compare two text fields we usually implicitly assume that neither of them has special symbols or trailing spaces. A good data samurai is able to recognize implicit assumptions and test them explicitly.

3) Double calculation. This habit is sometimes overlooked even by experienced analysts, probably because it sometimes requires rather tedious effort. This habit is about creating alternative calculations, often in a different tool -- typically Excel. The point is to test the core logic, therefore such an alternative calculation can include only a subset of the original data and need not cover minor cases. The results achieved using the alternative calculation should be equal to the results of the main calculation logic, regardless of whether it's done in SQL or some BI/ETL tool.
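The assumption tests from habit 2 are usually only a few lines each. Here is a minimal Python sketch of the three checks mentioned above (unique keys, no nulls, expected encoding) plus one implicit assumption (no leading or trailing spaces). The sample rows and field names are hypothetical.

```python
from collections import Counter

# Hypothetical extract; in practice these rows would come from the source system.
rows = [
    {"customer_id": 101, "gender": "M", "email": "a@example.com"},
    {"customer_id": 102, "gender": "F", "email": None},
    {"customer_id": 102, "gender": "f", "email": "c@example.com "},
    {"customer_id": 104, "gender": "", "email": "d@example.com"},
]

def duplicates(rows, field):
    """'Field has unique keys': values that occur more than once."""
    counts = Counter(r[field] for r in rows)
    return {value: n for value, n in counts.items() if n > 1}

def null_count(rows, field):
    """'Field has no nulls': number of missing values."""
    return sum(1 for r in rows if r[field] is None)

def distinct_values(rows, field):
    """'Field is encoded with M and F': every distinct value with its frequency."""
    return dict(Counter(r[field] for r in rows))

def untrimmed(rows, field):
    """Implicit assumption: text values have no leading or trailing spaces."""
    return [r[field] for r in rows
            if isinstance(r[field], str) and r[field] != r[field].strip()]

print(duplicates(rows, "customer_id"))  # {102: 2}
print(null_count(rows, "email"))        # 1
print(distinct_values(rows, "gender"))  # {'M': 1, 'F': 1, 'f': 1, '': 1}
print(untrimmed(rows, "email"))         # ['c@example.com ']
```

In this made-up extract every single assumption turns out to be wrong, which is not that far from what happens with real data.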

Let the Accuracy be with you.

July 5, 2016

Columnar in-memory ETL

Columnar databases are not exotic anymore. They're quite widespread and their benefits are well-known: data compression, high performance on analytical workloads, less demanding storage I/O throughput requirements. At the same time, ETL tools are still exclusively row-based, as they were 10 or 20 years ago. Below, I'm describing the working principle of columnar in-memory ETL [1], how it differs from row-based ETL/ELT tools, and its area of applicability (spoiler alert -- it's not just data transformation).

Incremental column-based transformations

Row-based ETL tools transform data row by row, when possible. For complex transformations, like aggregations, they build temporary data structures either in memory or on disk. This approach dictates a particular pattern -- row-based ETL tools strive to create as few temporary data structures as possible in order to reduce data redundancy and minimize the number of full table scans, because they are very costly.

A column-based ETL tool, similarly to a columnar database, operates not with rows of uncompressed data, but with compressed columns (typically using vocabulary compression). Unlike a row-based ETL system, it can re-use datasets and transform compressed data directly (i.e. without decompression) and incrementally. Let me illustrate it with two examples: calculating a new column, and filtering a table:

1) Calculating a new column is simply adding a new column to an existing table. Since a table is a set of columns, the result would be the original table (untransformed) + the newly calculated column. Note that in this case data is not moved -- we simply attach a new column to the already existing table, which is possible because our data is organized by columns, not by rows. In the case of a row-based ETL, we would have to update each row.

2) Filtering compressed columns can be done in two steps. Let's assume that we're using vocabulary compression. In this case a column is represented as a combination of a vocabulary of unique entries, and a vector of pointers to the vocabulary entries (one pointer per row). Filtering can be done by marking the selected vocabulary entries first, and then rebuilding the vector by removing pointers to the entries that are not selected. Here, the benefit is double: we don't calculate the filtering condition (which is a heavy operation) for every row, but only for the vocabulary, which is typically much shorter. Rebuilding the vector is a fast operation since it doesn't require calculating the filtering condition. Another benefit is that we don't have to rebuild the vocabulary -- we can keep using the old vocabulary with the new vector, thus greatly reducing data redundancy.
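The two examples above can be sketched in a few lines of Python. This is only an illustration of the idea under simplifying assumptions (plain Python lists, a hypothetical `Column` class and helper functions), not how EasyMorph or any particular engine is implemented.

```python
class Column:
    """A vocabulary-compressed column: unique values plus one pointer per row."""

    def __init__(self, vocabulary, pointers):
        self.vocabulary = vocabulary  # list of unique values
        self.pointers = pointers      # indexes into the vocabulary, one per row

    @classmethod
    def encode(cls, values):
        vocabulary, index, pointers = [], {}, []
        for v in values:
            if v not in index:
                index[v] = len(vocabulary)
                vocabulary.append(v)
            pointers.append(index[v])
        return cls(vocabulary, pointers)

    def decode(self):
        return [self.vocabulary[p] for p in self.pointers]


def add_column(table, name, column):
    """Example 1: the new table re-uses the existing column objects and attaches one more."""
    new_table = dict(table)  # copies references to columns, not the data
    new_table[name] = column
    return new_table


def filter_table(table, name, predicate):
    """Example 2: test the condition once per vocabulary entry, then rebuild pointer vectors."""
    col = table[name]
    selected = [predicate(v) for v in col.vocabulary]  # step 1: mark vocabulary entries
    keep = [i for i, p in enumerate(col.pointers) if selected[p]]  # step 2: surviving rows
    # Old vocabularies are shared with the source table; only the vectors are new.
    return {n: Column(c.vocabulary, [c.pointers[i] for i in keep])
            for n, c in table.items()}


table = {
    "city": Column.encode(["Boston", "Toronto", "Boston", "Boston", "Toronto"]),
    "sales": Column.encode([10, 20, 10, 30, 20]),
}

# A calculated column built from the vocabulary only, re-using the pointer vector.
sales = table["sales"]
doubled = Column([v * 2 for v in sales.vocabulary], sales.pointers)
with_doubled = add_column(table, "sales_x2", doubled)

boston = filter_table(with_doubled, "city", lambda v: v == "Boston")
print(boston["sales_x2"].decode())  # [20, 20, 60]
```

Note that the filtering condition was evaluated twice (once per distinct city), not five times (once per row), and that the filtered table still points to the same vocabulary objects as the original one.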

In the examples above transformations are incremental -- i.e. new tables are obtained by incrementally modifying and re-using existing data.

Transformations like aggregation, sorting, unpivoting and some others can also be done by directly utilizing the compressed data structure to a greater or lesser extent.

Incremental column-based transformation greatly reduces data redundancy, which brings us to the next topic:

In-memory transformations

Because of reduced redundancy and data compression, column-based ETL is a good candidate for in-memory processing. The obvious downside is the limitation by RAM (which will be addressed below). The upsides of keeping all data in memory are:

- Increased performance due to elimination of slow disk I/O operations.

- The ability to instantly view the results of literally every transformation step without re-running transformations from the beginning. A columnar ETL effectively stores all results of transformations with relatively little memory overhead, due to data compression and incremental logic.

Storing all data in memory has a quite interesting and useful consequence, barely possible for row-based ETL tools:

Reactive transformations

Having all intermediate transformation results in memory lets us re-calculate transformations starting from any point, instead of running everything from the beginning as is the case with traditional ETL tools. For instance, in a chain of 20 transformations we can modify a formula in the 19th transformation and recalculate only the last two transformations. Or the last 5, if we decide so. Or the last 7. If transformations form a non-linear graph-like workflow, we can intelligently recalculate only the necessary transformations, respecting dependencies.
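A minimal Python sketch of this idea for the simplest case, a linear chain, is shown below. The `Pipeline` class and its methods are hypothetical names made up for illustration; a real tool would track a dependency graph rather than a list.

```python
class Pipeline:
    """A linear chain of transformations that keeps every intermediate result."""

    def __init__(self, source, steps):
        self.source = source
        self.steps = list(steps)            # functions: previous result -> new result
        self.results = [None] * len(self.steps)
        self.runs = [0] * len(self.steps)   # how many times each step was executed
        self.recalculate(0)

    def recalculate(self, start):
        """Re-run steps from index `start` to the end, re-using cached results before it."""
        data = self.source if start == 0 else self.results[start - 1]
        for i in range(start, len(self.steps)):
            data = self.steps[i](data)
            self.results[i] = data
            self.runs[i] += 1

    def replace_step(self, index, func):
        """Change one transformation and recalculate only it and the steps after it."""
        self.steps[index] = func
        self.recalculate(index)


pipeline = Pipeline(
    [3, -1, 4],
    [
        lambda rows: [r for r in rows if r > 0],  # step 0: filter
        lambda rows: [r * 10 for r in rows],      # step 1: calculate
        lambda rows: sum(rows),                   # step 2: aggregate
    ],
)
print(pipeline.results[-1])  # 70

pipeline.replace_step(1, lambda rows: [r * 100 for r in rows])
print(pipeline.results[-1])  # 700
print(pipeline.runs)         # [1, 2, 2] -- the filter step was not re-run
```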

Effectively, it enables an Excel-like experience, where transformations are recalculated automatically when one of them changes, similarly to Excel formulas that are re-evaluated when another formula or a cell value changes.

This creates a whole new experience of working with data -- reactive, exploratory, self-service data manipulation.

Summary: a new kind of tool for data

The columnar representation allows incremental transformation of compressed data, which in turn makes it possible to greatly reduce redundancy (typical for row-based ETL tools), and keep entire datasets in memory. This, in turn, speeds up calculations and enables reactive, interactive data exploration and data analysis capabilities.

Columnar in-memory ETL is basically a new kind of hybrid technology in which there is no distinct borderline between data transformation and data analysis. Instead of slicing and dicing a pre-calculated data model like OLAP tools do, we get the ability to explore data by transforming it on the fly. At the same time it does the traditional ETL job, typical for row-based ETL utilities.

The RAM limitation still remains, though. It can be partially mitigated with a data partitioning strategy, where a big dataset is sliced into parts which are then processed in parallel in a map/reduce fashion. In the long term, Moore's law still holds for RAM prices, which benefits in-memory data processing in general.
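A partitioning strategy of this kind can be sketched as follows. The function names and the sample data are hypothetical, and threads are used only to keep the example self-contained; a real engine would more likely spread partitions across processes or machines.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition(rows, size):
    """Slice a big dataset into parts of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def map_part(part):
    """Map step: aggregate one partition independently of the others."""
    totals = Counter()
    for region, amount in part:
        totals[region] += amount
    return totals


def reduce_parts(partials):
    """Reduce step: merge the per-partition aggregates into one result."""
    result = Counter()
    for partial in partials:
        result.update(partial)  # Counter.update adds the amounts together
    return result


rows = [("West", 10), ("East", 5), ("West", 7), ("East", 1), ("North", 3)]

with ThreadPoolExecutor(max_workers=2) as pool:
    partials = list(pool.map(map_part, partition(rows, 2)))

totals = reduce_parts(partials)
print(dict(totals))
```

Only one partition per worker has to be held in memory during the map step, and the reduce step works on the much smaller aggregates.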

Figure: Row-based vs Columnar In-memory ETL

All in all, while processing billions of rows is still more appropriate for row-based ETL tools than for columnar ones, the latter represent a new paradigm of mixed data transformation and analysis, which makes them especially relevant now that public interest in self-service data transformation is growing.

[1] You can take a look at an example of a columnar in-memory ETL tool here: http://easymorph.com.

Labels:

EasyMorph

June 13, 2016

EasyMorph 3.0: A combine for your data kitchen

EasyMorph v3.0 is about to be released, and its beta version is already available for download. As the tool matures, its product concept solidifies. Version 3.0 is a major milestone in this regard, because the long process of product re-positioning that started last year is now complete, and a long-term vision has been formed. In this post I would like to explain a bit more what EasyMorph has morphed into (pun intended).

To put it simply, EasyMorph has become a "combine for the data kitchen" (if you've never heard about the data kitchen concept, check out this post). The analogy with a kitchen combine is not a coincidence -- just like real-life kitchen combines, EasyMorph has several distinct functions that all utilize the same engine. In our case it's a reactive transformation-based calculation engine with four major functions built on top of it:

- Data transformation

- Data profiling and analysis

- File conversion and mass operations with files

- Reporting

Data transformation

This is probably the most obvious function, as people usually know EasyMorph as an ETL tool. In this role everything is more or less typical -- tabular data from databases and files is transformed using a set of configurable transformations. What's less typical is support for numbers and text in one column, non-relational transformations (e.g. creating column names from the first N rows, or filling down empty cells), and the concept of iterations inspired by functional programming.

Data profiling and analysis

This function is usually less obvious because data profiling tools typically don't do transformations, but rather show some hard-coded statistics on data -- counts, uniqueness, distribution histograms, etc. QViewer is a typical example of such a tool.

Data profiling with EasyMorph is different, because instead of using a fixed set of pre-defined hard-coded metrics you can calculate such metrics on the fly, and visualize them using drag-and-drop charts. While this approach sacrifices some simplicity (you might need, say, 3 clicks instead of 1 to calculate a metric) it enables much broader analysis and more precisely selected subsets of data thus providing way more flexibility than typical data profiling tools.

I can say that in my work I use EasyMorph for data analysis and profiling much more often than for ETL, simply because new data transformations need to be designed only once in a while (then they're just scheduled), but I do data analysis every day.

File conversion and mass operations with files

While file conversion is a rather obvious function (read a file in one format, write in another), the ability to conveniently perform mass manipulations with files (copying, renaming, archiving, uploading, etc.) is a surprising and underestimated function of EasyMorph. Really, who would expect that what supposedly is an ETL tool can be used for things like that? But since EasyMorph is a "data kitchen combine" rather than a typical ETL tool, this is exactly what it can be used for.

Since creating a list of files in a folder is as simple as dragging the folder into EasyMorph (recursive subfolder scanning is supported from version 3.0) you can get a list of, say, 40'000 files in 1000 folders in literally 5 seconds, sorted by size, creation time, folder and whatnot. Finding the biggest file? Just two clicks. Filter only spreadsheets? One more click. Exclude read-only? Another click.
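For comparison, here is roughly what the same kind of file listing looks like when scripted by hand. This is only an illustrative Python sketch: the `list_files` helper, the chosen attributes and the demo files are assumptions, not a description of how EasyMorph works internally.

```python
import stat
import tempfile
from pathlib import Path


def list_files(folder):
    """Recursively list files with a few attributes, one dict per file."""
    files = []
    for path in Path(folder).rglob("*"):
        if path.is_file():
            info = path.stat()
            files.append({
                "path": path,
                "folder": path.parent,
                "size": info.st_size,
                # Treat a file as read-only when its owner has no write permission.
                "read_only": not (info.st_mode & stat.S_IWUSR),
            })
    return files


# Demo on a throwaway folder with hypothetical files.
with tempfile.TemporaryDirectory() as root:
    (Path(root) / "sub").mkdir()
    (Path(root) / "report.xlsx").write_bytes(b"x" * 300)
    (Path(root) / "sub" / "data.csv").write_bytes(b"x" * 100)
    (Path(root) / "sub" / "budget.xlsx").write_bytes(b"x" * 200)

    files = list_files(root)
    biggest = max(files, key=lambda f: f["size"])
    spreadsheets = sorted(
        (f for f in files if f["path"].suffix.lower() in {".xls", ".xlsx"}),
        key=lambda f: f["size"],
        reverse=True,
    )
    writable = [f for f in spreadsheets if not f["read_only"]]

    biggest_name = biggest["path"].name
    spreadsheet_names = [f["path"].name for f in spreadsheets]

print(biggest_name)
print(spreadsheet_names)
```

Each of the "clicks" mentioned above (biggest file, only spreadsheets, exclude read-only) corresponds to one sort or filter expression here.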

Now add the capability of running an external application (or a Windows shell command) for each file (using iterations) with command line composed using a formula, and you get a perfect replacement for batch scripts to do mass renaming, copying, archiving, sending e-mails or anything else that can be done from the command line. And, just like batch scripts, EasyMorph projects themselves can be executed from the command line, so they can be triggered by a 3rd party application (e.g. scheduler).
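The "one command per file, with the command line composed by a formula" pattern can be sketched in Python as follows. Everything here is a hypothetical stand-in: `build_command` plays the role of the formula, and the command itself simply launches the Python interpreter to report a file size so that the example runs on any system.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def build_command(path):
    """Compose the command line for one file (the "formula" part).

    Here the command launches Python itself to print the file size; in
    practice it could be any archiver, converter or uploader that has a
    command-line interface.
    """
    script = "import os, sys; print(os.path.getsize(sys.argv[1]))"
    return [sys.executable, "-c", script, str(path)]


def run_for_each(paths):
    """Run the composed command once per file and collect its output."""
    results = {}
    for path in paths:
        done = subprocess.run(
            build_command(path), capture_output=True, text=True, check=True
        )
        results[path.name] = done.stdout.strip()
    return results


# Demo on a throwaway folder with hypothetical files.
with tempfile.TemporaryDirectory() as root:
    for name, size in [("a.txt", 3), ("b.txt", 5)]:
        (Path(root) / name).write_bytes(b"x" * size)
    results = run_for_each(sorted(Path(root).glob("*.txt")))

print(results)
```

Passing the command as a list of arguments rather than one string avoids quoting problems with file names that contain spaces.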

EasyMorph has a number of workflow transformations that don't actually transform anything but perform various actions, like launching an external program or even pausing for N seconds. Therefore, it's basically a visual workflow design tool with the capability of designing parallelized (e.g. parallel mass file conversion) and asynchronous processes.

Reporting

PDF reporting is the headline feature of version 3.0 and a new function of EasyMorph. The idea behind it was simple: sometimes a result of data analysis has to be shared, and PDF is the most universally used format for sharing documents. At this point, PDF reporting in EasyMorph is not meant to be pixel-perfect and its customization capabilities are rather limited. Instead, the emphasis is on making report creation quick and less time-consuming. We're testing the waters with this release, and the direction of future development will depend on feedback received from users.

Summary

While it might not count as a distinct function, sometimes it's convenient to keep EasyMorph open just for ad hoc calculations, e.g. to paste a list and find duplicates in it, or even to dynamically generate parts of some script -- e.g. comma-separated lists of fields or values. As a "data kitchen combine", EasyMorph is not an application for a broad audience, but rather a professional tool for data analysts who work with data every day. And as with real-life kitchen combines, some people use one function more often, some another. Pick yours.

Labels:

EasyMorph

May 15, 2016

A peek into future Business Intelligence with AI and stuff

What could future Business Intelligence look like? Usually, I'm skeptical about "disruptive" ideas like natural language queries or automatically generated analytical narratives (although I respect the research effort), but recently I saw something that, for the first time, looked really interesting when applied to data analysis. I will tell you what it is shortly, but first I have to explain my skepticism.

Typing in natural language queries won't work because it's no better than writing SQL queries. The syntax is surely different, but it still has to be learned. It doesn't provide the expected freedom, just as SQL didn't. Besides unexpected syntax restrictions (which have to be learned by the user), queries quickly become long and complicated. I played a bit with NLP (natural language processing) queries done in Prolog in my school years and have some understanding of the complexities related to NLP.

This can be somewhat mitigated by voice input. However, virtual assistants like Siri/Alexa/Cortana are built around canned responses, so that won't work either, because analytical ad hoc queries tend to be very different from one another, and they always have a context.

Now, here is the promising technology. It's called Viv and I highly recommend watching its demo (it's about 30 minutes):

Two things make Viv different: self-generating queries and the ability to use a context. This can potentially make voice-based interactive data analysis finally possible. Not only can a service like Viv answer queries, e.g. "How many new customers did we get since January", you should also be able to make it actionable. How about setting up alerts, like this: "Let me know next time when monthly sales in the West region drop below 1mln. Do it until the end of this year"? Or sharing: "Send this report to Peter and Jane in Corporate Finance department". Such a virtual data analyst can participate in meetings, answer spontaneous questions, send out meeting results -- all done by voice. Quite attractive, isn't it?

Data analysis is a favorable area for artificial intelligence because it has a relatively small "universe" where entities (customers, transactions, products, etc.) are not so numerous, and their relationships are well understood. If you ever tried to design or analyze a conceptual data warehouse model, then most probably you have a good picture of that "universe".

And it seems like the right technology to operate with this "universe" might arrive soon.

May 8, 2016

Hints and tips on using QViewer for inspecting resident tables in QlikView

Three years ago I wrote "How to look inside resident tables at any point of loading script". This technique has proved to be quite successful and efficient, and has been praised by many prominent QlikView developers since then.

This post is a round-up of some best practices for using QViewer to inspect resident tables in QlikView, collected over the last 3 years:

Here is the most recent variant of the INSPECT subroutine:

    SUB Inspect (T)
        // let's add some fault tolerance
        LET NR = NoOfRows('$(T)');
        IF len('$(NR)')>0 THEN
            // Table exists, let's view it
            STORE $(T) into [$(QvWorkPath)\~$(T).qvd] (qvd);
            EXECUTE "C:\<pathToQViewer>\QViewer.exe" "$(QvWorkPath)\~$(T).qvd";
            EXECUTE cmd.exe /c del /q "$(QvWorkPath)\~$(T).qvd";
        ELSE
            // Table doesn't exist. Let's display a messagebox with a warning
            _MsgBox:
            LOAD MsgBox('Table $(T) doesn' & chr(39) & 't exist. Nothing to inspect.', 'Warning', 'OK', 'ICONEXCLAMATION') as X AutoGenerate 1;
            Drop Table _MsgBox;
        ENDIF
        // Namespace cleanup
        SET NR=;
    ENDSUB

The installer of the next version of QViewer will create a registry key with the path to QViewer, so the subroutine will be able to read the location of qviewer.exe from the registry instead of using a hardcoded file path (kudos to Matthew Fryer for the suggestion).

INSPECT is quite helpful in verifying joins for correctness. For this, insert CALL INSPECT twice -- once before a join, and once after it. This will allow you to see whether the resulting table has more rows after the join than before, and check if the join actually appended anything, i.e. if appended columns actually have some data in them.
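The before/after comparison itself is simple enough to express in code. The following Python sketch is not QlikView script and not part of QViewer; it only illustrates the two checks (did the row count grow, and do the appended columns contain data) on hypothetical in-memory tables, with a deliberately duplicated key in the lookup table.

```python
def left_join(left, right, key):
    """Left join two lists of dicts on `key`; unmatched rows get no extra columns."""
    index = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    result = []
    for row in left:
        for match in index.get(row[key], [None]):
            result.append({**row, **(match or {})})
    return result


def check_join(before, after, appended_columns):
    """Compare a table before and after a join, as two INSPECT calls would let you do."""
    return {
        "rows_before": len(before),
        "rows_after": len(after),
        "rows_multiplied": len(after) > len(before),
        # How many rows actually received a value in each appended column.
        "filled": {
            col: sum(1 for r in after if r.get(col) is not None)
            for col in appended_columns
        },
    }


orders = [
    {"customer_id": 1, "amount": 100},
    {"customer_id": 2, "amount": 50},
    {"customer_id": 3, "amount": 70},
]
customers = [
    {"customer_id": 1, "region": "West"},
    {"customer_id": 2, "region": "East"},
    {"customer_id": 2, "region": "North"},  # duplicate key: a typical cause of a wrong join
]

joined = left_join(orders, customers, "customer_id")
report = check_join(orders, joined, ["region"])
print(report)
```

In this example the join produces more rows than it started with (because of the duplicate key) and leaves one row without a region, which are exactly the two symptoms worth looking for.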

To find duplicates in a column -- double-click the column header to open a listbox with the unique values in that column, and then click Count in that list. On the first click QViewer sorts the values by count in descending order, thus showing duplicate entries (which have counts > 1) at the top of the list. Checking a primary key for duplicates after a join can help detect wrong joins.
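The logic behind this check is a frequency count sorted in descending order. A minimal Python equivalent, with hypothetical function names and sample values, could look like this:

```python
from collections import Counter


def value_counts(values):
    """Unique values with their counts, most frequent first."""
    return Counter(values).most_common()


def duplicates(values):
    """Values that occur more than once, with their counts."""
    return [(value, count) for value, count in value_counts(values) if count > 1]


# Hypothetical primary key column after a join.
customer_ids = [101, 102, 103, 102, 104, 102, 103]

print(value_counts(customer_ids)[:3])
print(duplicates(customer_ids))
```

An empty result from `duplicates` means the column can serve as a primary key.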

To find duplicate rows in a table -- click "Morph It" to open the table in EasyMorph, and then apply the "Keep Duplicates" transformation. You can also filter rows by applying the "Filter" or "Filter by expression" transformation.

When you deal with wide tables that have many columns, you might need to find a specific column. Press F5 to open Table Metadata, and then sort field names in alphabetical order. Another common use case for Table Metadata is checking whether columns have values of the expected type. For instance, if a column is expected to have only numeric values, its "Text count" should be 0.

To find a value in a column -- double-click the column header to open a list of unique values, then use the search field above the list. To locate the searched value in the main table, simply double-click the value in the list. Press F3 to find the next match in the main table.

Currently, the search feature is somewhat obscure (as rightly pointed out by some users). We will be introducing a more convenient full table search in QViewer v2.3, coming out in June. Subscribe to our mailing list on easyqlik.com to get a notification when it happens.

May 1, 2016

Why I prototype Qlik apps in EasyMorph before creating them

If you want to create a Qlik app, just create it -- why would anyone build a prototype in another tool first? Isn't it just a waste of time? For simple cases, probably yes, but for complex apps, prototyping first allows designing them faster and more reliably. Here is why:

When developing Qlik apps with a complex transformation logic one of the main challenges is to deal with data quality and data structure of poorly documented source systems. Therefore the most time-consuming phase is figuring out how to process data correctly and what can potentially go wrong. There are many questions to answer during this phase, for instance:

- How do we merge data -- what are the link fields, what fields are appended?

- Does any combination of the link fields have duplicates in one or the other table?

- Do the link fields have nulls?

- Are nulls actually nulls or empty text strings?

- Are numbers actually numbers, not text?

- Do text values have trailing spaces?

- After we join tables, does the result pass a sanity check?

- How can we detect it if the join goes wrong on another set of data (e.g. for another time period)?

- Are dates and amounts within expected ranges?

- Do dimensions have a complete set of values, or is anything missing?

- When dealing with data in spreadsheets:

  - Are text and numbers mixed in the same column? If yes, what is the rule to clean things up?

  - Are column names and their positions consistent across spreadsheets? If not, how do we handle the inconsistency?

  - Are sheet names consistent across spreadsheets?
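Several of the questions above (nulls versus empty strings, numbers stored as text, trailing spaces) boil down to counting the kinds of values in a column. Here is a small Python sketch of such a check; the `profile_column` helper and the sample values are hypothetical, and it assumes a column contains only numbers, strings and nulls.

```python
def profile_column(values):
    """Count the kinds of values found in one column.

    Assumes every value is a number, a string or None.
    """
    stats = {
        "nulls": 0,
        "empty_strings": 0,
        "numbers": 0,
        "numeric_text": 0,
        "other_text": 0,
        "trailing_spaces": 0,
    }
    for value in values:
        if value is None:
            stats["nulls"] += 1
        elif isinstance(value, (int, float)):
            stats["numbers"] += 1
        elif value == "":
            stats["empty_strings"] += 1
        else:
            if value != value.rstrip():
                stats["trailing_spaces"] += 1
            try:
                float(value)  # text that merely looks like a number
                stats["numeric_text"] += 1
            except ValueError:
                stats["other_text"] += 1
    return stats


# Hypothetical link field with a mix of typical problems.
link_field = [1001, "1002", None, "", "A17 ", 1003, "1004 "]
print(profile_column(link_field))
```

A link field that reports anything other than zero for `nulls`, `empty_strings`, `numeric_text` or `trailing_spaces` is likely to produce unmatched rows in a join.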

This is where EasyMorph comes in handy. First, it loads data once and then keeps it in memory, so the data doesn't have to be reloaded every time. And if you load sample data only once, why not use a bigger data set, which is usually better for data profiling? Not only does EasyMorph load data only once, it also keeps the results of all successful transformations in memory. So if an error occurs, you continue from where it stopped, not from the beginning -- another time-saving feature.

Second, EasyMorph runs transformations automatically in the background after any change. It's as if you were writing a Qlik script and, after every change or new statement, Qlik proactively ran the script without requiring you to press Reload. Except Qlik doesn't do that. Basically, transformations are to EasyMorph what formulas are to Excel -- you change one and immediately see the new result of calculations, regardless of how many formulas/transformations it took.

Third, designing a transformation process visually is much faster than scripting. Some Qlik developers are exceptionally good at writing scripts, but even they can't beat it when a whole transformation like aggregation is created literally in two clicks. If one knew exactly from the beginning what a script should do, then writing it quickly would not be a problem. It's the numerous iterative edits, corrections and reloads that make writing Qlik scripts slow. Once I have designed and debugged the transformation logic in EasyMorph, scripting it in Qlik is usually a matter of a couple of hours, and it typically works reliably and as expected from the first run.

Another important advantage of prototyping Qlik apps in EasyMorph is that it allows creating a reference result. When you design a Qlik application based on an existing Excel or BI report, the task is usually easier because the numbers in the old report serve as a reference you can compare against. However, if you design a brand new report there might be no reference at all. How can you be sure that your Qlik script, expressions and sets work correctly? There are a whole lot of things that can go wrong. Building a prototype in EasyMorph gives you that reference point, and not just for the script, but also for expressions, including set analysis. In airplanes, crucial indicators like altitude and velocity must be measured using at least two probes (for each metric) that utilize different principles of physics, so that pilots can be sure they are measured correctly. The same principle applies here -- "get another reference point".

I also found that designing apps in close cooperation with business users is more productive when the users have a good understanding of how the transformation logic works. It's better explained by letting them explore a visual process in EasyMorph than by showing them Qlik scripts that are totally cryptic to them.

Summary: EasyMorph is a professional tool which can be used by QlikView / Qlik Sense developers to create robust and reliable applications faster by prototyping them first. I do it myself, and so far it works pretty well.

Labels:

EasyMorph,

Qlik Sense,

QlikView

March 11, 2016

Thoughts on Tableau acquiring HyPer

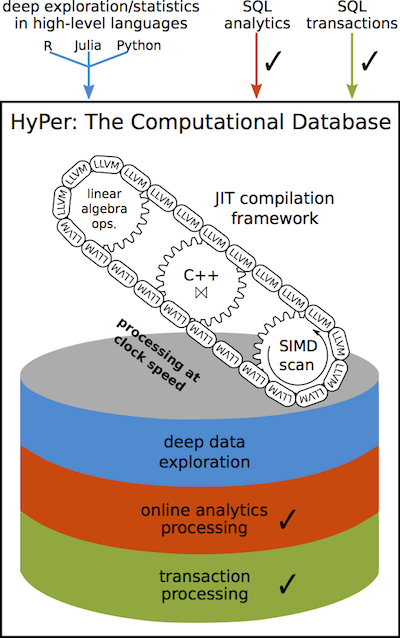

As announced today [1][2], Tableau is acquiring HyPer -- a small German database company that created a high-speed in-memory hybrid OLTP/OLAP database engine. HyPer was founded by two university professors and has ten PhD students and alumni on board, four of whom will be joining Tableau.

HyPer claims to have high performance in both transactional and analytical types of workloads, achievable even on ARM architectures. It uses many smart techniques like virtual memory snapshotting to run long and short queries on the same datasets, on-the-fly compilation of queries into low-level code, adaptive indexing, hot clustering for query parallelization and many others (see HyPer overview).

Does it mean that Tableau is becoming a database company? Apparently not. First, because that's not what they do, and second, because HyPer is more of an academic research technology than a market-ready product.

To me this acquisition is very much like Qlik's acquisition of NComVa a few years ago. Let me explain it a bit:

NComVa was a small company that built interactive Javascript data visualizations. From what I understand, Qlik Sense to some extent exploits the expertise acquired from NComVa. Qlik is very good at engineering highly optimized data engines, but academic data visualization and user experience can hardly be counted as their core competence (I'll write a separate post on it). So Qlik needed a "brain injection", which led to the birth of Qlik Sense.

With Tableau the situation is opposite -- their competence in data visualization and usability is outstanding, however high-performance in-memory data processing has never been a strong point in Tableau's agenda -- the idea was to piggyback existing relational DBMSes. To remind you, Tableau only recently switched to a 64-bit architecture and introduced multi-threaded query execution for their in-memory engine.

Therefore, the acquisition of HyPer is a long needed "brain injection" of top-notch data processing expertise. And it may change things significantly for Tableau customers, competitors and Tableau themselves.

I would suggest that in 1-2 years (not earlier) Tableau will introduce something like a super-cache -- the ability to hold big amounts of data (up to 1 TB or more) in memory, query it instantly with sub-second response times, and update in real-time.

Interesting questions are: whether it will require data modelling, how data will be loaded, and whether it will scale horizontally. The latter question is the most interesting, because Qlik, the closest Tableau's competitor, doesn't scale horizontally meaning that a single dataset can't be split across several nodes that are queried in parallel. HyPer hints at distributed data processing, so it could be possible that the "super-cache" will scale horizontally, which can be a big deal.
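To make "scaling horizontally" a bit more concrete, here is a toy Python sketch of the idea: one dataset is split into shards, each shard is aggregated on its own, and the partial results are combined. Everything in it is an assumption made for illustration (the function names, the round-robin split, threads standing in for nodes); it says nothing about how HyPer, Tableau or Qlik actually work internally.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_shards(rows, node_count):
    """Distribute rows round-robin across hypothetical nodes."""
    return [rows[i::node_count] for i in range(node_count)]


def partial_sum(shard):
    """Work done locally on one node: aggregate only its own shard."""
    return sum(amount for _, amount in shard)


def distributed_total(rows, node_count=3):
    """Query all shards in parallel, then combine the partial results."""
    shards = split_into_shards(rows, node_count)
    # Threads stand in for separate machines in this toy example.
    with ThreadPoolExecutor(max_workers=node_count) as pool:
        partials = list(pool.map(partial_sum, shards))
    return sum(partials)


# Made-up sample data: (region, amount)
sales = [("north", 120), ("south", 80), ("east", 200), ("west", 50), ("north", 30)]
total = distributed_total(sales)
print(total)
```

A sum is the easy case because partial results combine trivially. Joins and distinct counts across shards are much harder, which is part of why horizontal scaling is a big deal.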

All in all, the acquisition is an intriguing twist of story. It will be interesting to see how it unfolds.

[1] http://www.tableau.com/about/press-releases/2016/tableau-acquires-hyper

[2] http://www.tableau.com/about/blog/2016/3/welcome-hyper-team-tableau-community-51375

Labels: Qlik, Qlik Sense, Tableau

March 3, 2016

Are BI/ETL vendors ready for "data kitchens"? Because users are

If you've been in the BI/ETL industry for several years you may remember that many years ago BI/ETL vendors actively promoted the concept of so-called "BI standardization". Gartner, Forrester and other market analysts also talked about it -- organizations should stop having "zoo parks of systems" and standardize on one platform. At that time even the big BI vendors were only transitioning from a single-tool client-server architecture to a multiple-tool web-based one, and many hoped that once the vendors completed the transition, organizations would be able to cover their data analysis needs with a comprehensive product set (platform) from one vendor. These expectations were driven by the high cost and complexity of analytical systems at that time: standardizing on one platform would facilitate building in-house expertise, lower maintenance costs, and simplify support and administration.

However, the reality turned out to be more complex. It became clear that no vendor can offer a really comprehensive product suite that would satisfy the data analysis hunger of various types of users. The more users became involved in data analysis, the more diverse and sophisticated needs they developed.

It seems to me that organizations are increasingly ready to embrace the concept of a "data kitchen" where users have a choice of many tools, so that they can choose whether to use a "spoon", "fork", or "knife" for a job, rather than having just a "spoon" for all cases. However, the problem is that the vendors are not ready -- they still want customers to buy their expensive, cumbersome enterprise platforms.

So what would be the difference between a "data kitchen tool" and an "old-school tool":

I guess the table above is self-explanatory. I would only make a couple of notes:

Usability was long ignored but now it's the king. First, because data analysis is difficult, I believe that software vendors should go the extra mile to design a well thought out, clean and polished UI. Enterprise software should be smarter and simpler, even at the cost of removing some functionality (look at some popular mobile apps). Second, when you have many tools in your "kitchen" you can't afford to spend a lot of time figuring out how to use each of them. A single tool may not require too much attention. Selfish ones don't survive in a team. Hence the necessity of open data formats and APIs. Open metadata is required for thorough data governance -- a must-have for a "data kitchen".

Another note is about price. The cost structure per user will change. If previously an organization could spend $5,000 on one license for one user, one should not expect that because of the "data kitchen" organizations will start buying 10 tools for the same $5K each, spending $50K per user in total. Instead they will be looking to offer a user 10 tools for $500 each. I believe those software vendors that resist the change and keep prices high will eventually be squeezed out of the market.

You can check your favorite software against the table above. Some products are better suited to find a place in a "data kitchen", some are not. In my opinion Tableau is a good example of a well thought out and polished user experience aimed at self-service use. I only wish they opened TDE and/or adopted some open format for data exchange. I hope EasyMorph can become another good example of a tool that is perfectly suitable for the "data kitchen" concept. We're living in an interesting time after all -- the BI/ETL market stagnated for a long time, but now the pendulum has swung in the opposite direction and we can observe many interesting products coming to the market.
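The arithmetic behind this note can be spelled out in a few lines of Python. This is only a sketch of the reasoning, using the round figures from the paragraph above; the function and variable names are made up for illustration.

```python
def cost_per_user(tools: int, price_per_tool: int) -> int:
    """Total license spend for one user, in dollars."""
    return tools * price_per_tool


old_school = cost_per_user(tools=1, price_per_tool=5_000)      # one big platform
naive_kitchen = cost_per_user(tools=10, price_per_tool=5_000)  # 10 tools at old prices
data_kitchen = cost_per_user(tools=10, price_per_tool=500)     # 10 tools at $500 each

print(old_school, naive_kitchen, data_kitchen)  # 5000 50000 5000
```

In other words, the budget per user stays roughly the same while the price per tool has to drop about tenfold.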

Isn't it great?

January 30, 2016

The long tail of the information explosion

You have probably heard a lot about Big Data and everything related to it. However, Big Data is only one side of the explosive growth of digital information that we have been observing. The other side is often overlooked, although it can have no less disruptive an influence on the traditional BI/DWH landscape than Big Data.

The information explosion (it's a lame term but I'll stick to it in this post for simplicity) is usually associated with the rapidly growing size of data sets (transactional and semi-structured), up to the point where organizing and querying them using traditional technologies becomes very inefficient or too costly.

The other side of the story, however, is the number of data sets. Let me illustrate it:

Not only are data sets growing in volume -- they are also growing in number. New data sets are spawning at an exponential rate. For every new system with large data volume there are tens of small data sets in spreadsheets, text files, web pages and whatnot. That's why I call it The Long Tail -- these are myriads of small data sources, many of which are human-generated rather than machine-generated. What used to be a single number somewhere in an email is becoming a list of numbers. What used to be a list is becoming a table. What used to be a table is becoming a data mart. These new data sets are relatively small, but there are lots of them, and this presents a number of challenges:

First, building a single data warehouse is becoming less and less relevant because by the time you have designed a data model and ETL processes to upload a new data set into a data warehouse, there are two more new data sets and your data warehouse is obsolete and incomplete again. By the time you upload these two there will be another four. Therefore, responsibility for data transformation should more and more often be given to business users (hint: EasyMorph can help with it).

Second, the complexity of traditional ETL tools is becoming an obstacle because they were designed for a relatively small number of transformation steps on large volumes of data, assuming that a lot of business logic would be handled by source systems. However, the growing number of new data sources requires designing exponentially more transformations in less time, with an increasing share of embedded business logic.