As became known today [1][2], Tableau is acquiring HyPer -- a small German database company that created a high-speed in-memory hybrid OLTP/OLAP database engine. HyPer was founded by two university professors and has ten PhD students and alumni on board, four of whom will be joining Tableau.

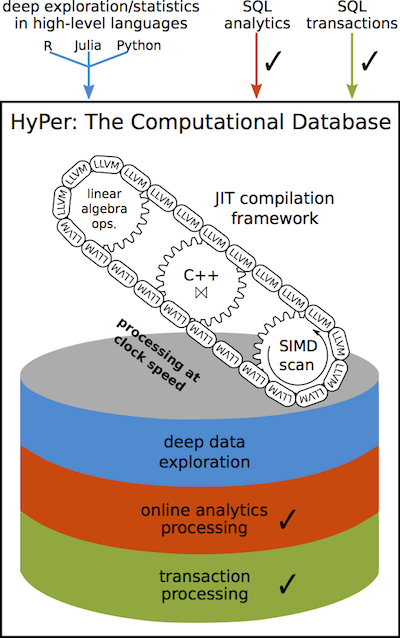

HyPer claims high performance in both transactional and analytical workloads, achievable even on ARM architectures. It uses many smart techniques, such as virtual memory snapshotting to run long and short queries on the same datasets, on-the-fly compilation of queries into low-level code, adaptive indexing, hot clustering for query parallelization and many others (see HyPer overview).

Does it mean that Tableau becomes a database company? Apparently not. First, because that's not what they do, and second, because HyPer is an academic research technology rather than a market-ready product.

To me this acquisition is very much like Qlik's acquisition of NComVa a few years ago. Let me explain it a bit:

NComVa was a small company that built interactive JavaScript data visualizations. From what I understand, Qlik Sense to some extent exploits the expertise acquired from NComVa. Qlik is very good at engineering highly optimized data engines, but academic-grade data visualization and user experience can hardly be counted among their core competences (I'll write a separate post on it). So Qlik needed a "brain injection", which led to the birth of Qlik Sense.

With Tableau the situation is the opposite -- their competence in data visualization and usability is outstanding, but high-performance in-memory data processing has never been a strong point on Tableau's agenda -- the idea was to piggyback on existing relational DBMSes. To remind you, Tableau only recently switched to a 64-bit architecture and introduced multi-threaded query execution for their in-memory engine.

Therefore, the acquisition of HyPer is a long-needed "brain injection" of top-notch data processing expertise. And it may change things significantly for Tableau customers, competitors and Tableau themselves.

My guess is that in 1-2 years (not earlier) Tableau will introduce something like a super-cache -- the ability to hold large amounts of data (up to 1 TB or more) in memory, query it with sub-second response times, and update it in real time.

The interesting questions are whether it will require data modelling, how data will be loaded, and whether it will scale horizontally. The last question is the most interesting, because Qlik, Tableau's closest competitor, doesn't scale horizontally, meaning that a single dataset can't be split across several nodes that are queried in parallel. HyPer hints at distributed data processing, so it is possible that the "super-cache" will scale horizontally, which could be a big deal.
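To make "scaling horizontally" a bit more concrete, here is a minimal sketch in Python of the general idea: one dataset is split into shards, each shard is aggregated independently (threads stand in for separate nodes here), and the partial results are merged. This is only an illustration of the concept, not how HyPer, Tableau or Qlik actually work; the function names and the sample data are made up for the example.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition(rows, n_nodes):
    """Split rows round-robin into n_nodes shards (one per hypothetical node)."""
    return [rows[i::n_nodes] for i in range(n_nodes)]


def local_sum_by_key(shard):
    """Partial aggregate computed independently on a single shard."""
    totals = Counter()
    for key, amount in shard:
        totals[key] += amount
    return totals


def distributed_sum_by_key(rows, n_nodes=3):
    """Query all shards in parallel, then merge the partial aggregates."""
    shards = partition(rows, n_nodes)
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        partials = list(pool.map(local_sum_by_key, shards))
    merged = Counter()
    for partial in partials:
        merged.update(partial)  # Counter.update adds values key by key
    return dict(merged)


# Hypothetical sample data: (region, sales amount)
sales = [("EU", 10), ("US", 20), ("EU", 5), ("APAC", 7), ("US", 1)]
result = distributed_sum_by_key(sales)
```

The merge step is trivial for sums, which is why this kind of query parallelizes well; joins and distinct counts across shards are where a real distributed engine has to work much harder.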

All in all, the acquisition is an intriguing plot twist. It will be interesting to see how it unfolds.

[1] http://www.tableau.com/about/press-releases/2016/tableau-acquires-hyper

[2] http://www.tableau.com/about/blog/2016/3/welcome-hyper-team-tableau-community-51375

March 11, 2016

March 3, 2016

Are BI/ETL vendors ready for "data kitchens"? Because users are

If you've been in the BI/ETL industry for several years, you may remember that BI/ETL vendors used to actively promote the concept of so-called "BI standardization". Gartner, Forrester and other market analysts also talked about it -- organizations should stop having "zoo parks of systems" and standardize on one platform. At that time even the big BI vendors were only transitioning from a single-tool client-server architecture to a multiple-tool web-based one, and many hoped that once the vendors completed the transition, organizations would be able to cover their data analysis needs with a comprehensive product set (platform) from one vendor. These expectations were driven by the high cost and complexity of analytical systems at that time: standardizing on one platform would facilitate building in-house expertise, lower maintenance costs, and simplify support and administration.

However, the reality turned out to be more complex. It became clear that no vendor can offer a truly comprehensive product suite that would satisfy the data analysis hunger of all types of users. The more users became involved in data analysis, the more diverse and sophisticated their needs became.

It seems to me that organizations are increasingly ready to embrace the concept of a "data kitchen", where users have a choice of many tools so that they can choose whether to use a "spoon", "fork", or "knife" for a job, rather than having just a "spoon" for all cases. However, the problem is that the vendors are not ready -- they still want customers to buy their expensive, cumbersome enterprise platforms.

So what would be the difference between a "data kitchen tool" and an "old-school tool":

I guess the table above is self-explanatory. I would only make a couple of notes:

Usability was long ignored, but now it is king. First, data analysis is difficult, so I believe software vendors should go the extra mile to design a well-thought-out, clean and polished UI. Enterprise software should be smarter and simpler, even at the cost of removing some functionality (look at some popular mobile apps). Second, when you have many tools in your "kitchen" you can't afford to spend a lot of time figuring out how to use each of them. No single tool should demand too much attention. Selfish ones don't survive in a team. Hence the necessity of open data formats and APIs. Open metadata is required for thorough data governance -- a must-have for a "data kitchen".

Another note is about price. The cost structure per user will change. If an organization previously could spend $5,000 on one license for one user, one should not expect that because of the "data kitchen" organizations will start buying 10 tools at the same $5K each, spending $50K per user in total. Instead they will be looking to offer a user 10 tools for $500 each. I believe that software vendors who resist the change and keep prices high will eventually be squeezed out of the market.
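The arithmetic above can be spelled out in a few lines of Python. The figures are the ones from the paragraph; the helper name is made up for the illustration.

```python
def per_user_spend(tools, price_per_tool):
    """Total license spend per user, in dollars."""
    return tools * price_per_tool


old_single_tool = per_user_spend(1, 5000)    # one tool at $5,000
naive_kitchen = per_user_spend(10, 5000)     # ten tools at the old price
expected_kitchen = per_user_spend(10, 500)   # ten tools at $500 each

# The per-user budget stays flat, so the price per tool has to drop tenfold.
budget_is_flat = expected_kitchen == old_single_tool
```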

You can check your favorite software against the table above. Some products are better suited to find a place in a "data kitchen", some are not. In my opinion Tableau is a good example of a well-thought-out and polished user experience aimed at self-service use. I only wish they would open up TDE and/or adopt some open format for data exchange. I hope EasyMorph can become another good example of a tool that is perfectly suited to the "data kitchen" concept. We're living in an interesting time after all -- the BI/ETL market stagnated for a long time, but now the pendulum has swung in the opposite direction and we can observe many interesting products coming to the market.

Isn't it great?
