|

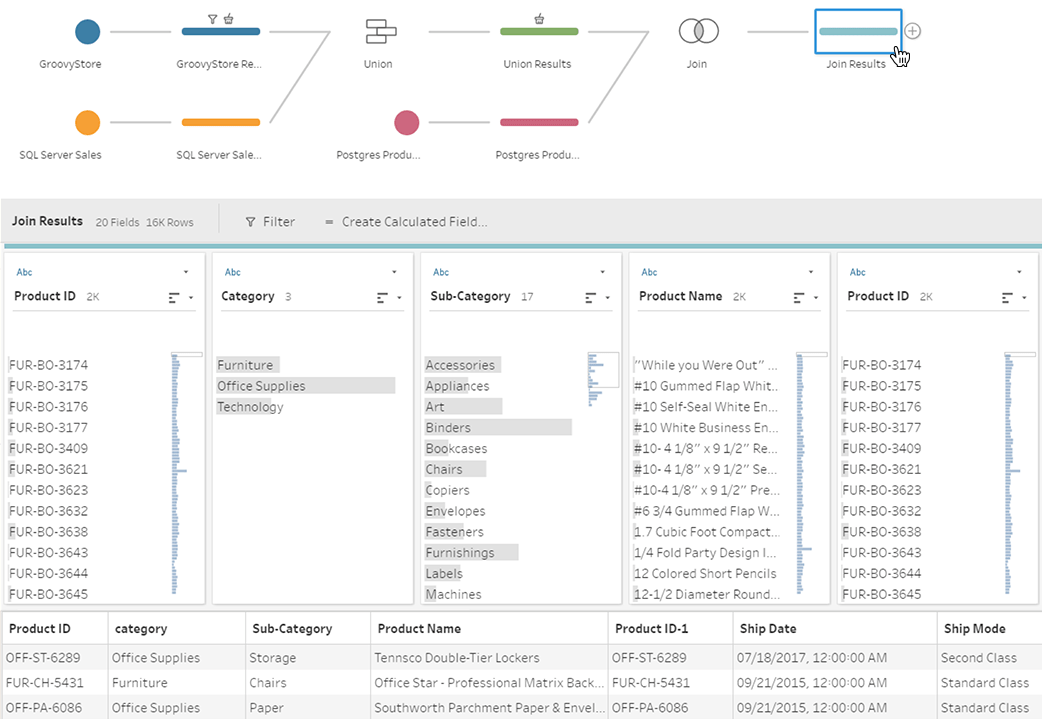

| Tableau Maestro. This screenshot belongs to tableau.com |

Before I go further, let me classify the existing data transformation offerings:

Personal Data Preparation Tools

These are rather simple applications that perform basic operations such as cleansing, filtering, and merging in a linear, non-parameterized way. While they're visual and target a non-technical audience, their applicability is usually limited: they don't support non-linear workflows (a must-have for anything non-trivial), have no means of automation or integration (e.g. running external applications), and offer a limited set of transforms. On the positive side, they're usually reasonably priced and easy to get started with.

Departmental Data Transformation (ETL) Applications

Applications in this category are full-featured, rather capable ETL programs that allow designing non-linear workflows (which typically look like a block diagram where inputs and outputs of blocks are connected with arrows), integrate with external applications, and run parameterized tasks on a schedule. They are far more capable than the personal data prep tools described above, while still remaining rather affordable. However, the vast majority of them have one big flaw that renders them barely useful for the Tableau audience: they are too IT/DBA/SQL-centric and therefore simply not suitable for an average Tableau user, unless s/he wants to dive into topics such as the nuances of the differences between the CHAR, VARCHAR and NVARCHAR data types on a daily basis (hint: it's not much fun).
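To make the "non-linear, parameterized" distinction concrete, here is a minimal sketch in plain Python. It is an illustration only and not how any of the tools mentioned here work internally; the function name `run_workflow`, the `min_amount` parameter, and the sample data are all made up for the example. The point is that two inputs feed a merge step, one input fans out into two branches, and a parameter changes the result without editing the workflow itself.

```python
# Hypothetical illustration: plain Python, not the API of any product named in this post.

def run_workflow(min_amount):
    """A parameterized, non-linear workflow: two inputs, one merge, two outputs."""
    # Two independent inputs (hard-coded here in place of real data sources).
    orders = [
        {"order_id": 1, "customer_id": 10, "amount": 250},
        {"order_id": 2, "customer_id": 11, "amount": 40},
        {"order_id": 3, "customer_id": 10, "amount": 90},
    ]
    customers = {10: "Acme", 11: "Globex"}

    # Branch A: filter orders using the workflow parameter.
    large_orders = [o for o in orders if o["amount"] >= min_amount]

    # Merge step: branch A is joined with the second input.
    enriched = [{**o, "customer": customers[o["customer_id"]]} for o in large_orders]

    # Branch B: the same orders input is aggregated independently of branch A.
    totals = {}
    for o in orders:
        name = customers[o["customer_id"]]
        totals[name] = totals.get(name, 0) + o["amount"]

    return enriched, totals


enriched, totals = run_workflow(min_amount=80)
print(enriched)
print(totals)
```

A linear tool would correspond to a single chain of steps over one table. As soon as a second input or a second output branch appears, the workflow becomes a graph, which is what the block diagrams in departmental ETL tools represent.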

EasyMorph, the data transformation tool I've designed and produced, technically also belongs to the Departmental ETL category. However, unlike most ETL tools, it's designed from the ground up first and foremost for non-technical users, which required walking away from the traditional approach to ETL and rethinking data transformation from scratch.

Enterprise ETL Platforms

These are mastodons in terms of features, scale, and, of course, price. Big guns with a big price tag. Most of them are also heavily IT-centric, although some Enterprise ETL platforms (e.g. Alteryx and Lavastorm) have managed to get closer to non-technical users than the rest of the group. The exorbitant cost of licenses in this category severely restricts the number of people who can use them for self-service data transformation within an organization, especially considering that in many cases they are used for departmental (and even personal) ETL rather than enterprise ETL, which is clearly overkill. After all, having 75-80% of revenue reinvested into sales and marketing allows hiring very skilled sales people :)

Now, where does Maestro fit in this classification? While it's still in beta and no final product has been demonstrated yet, I probably wouldn't be terribly off base in assuming that Maestro is a personal data preparation tool (probably with a non-linear workflow). This means that Maestro, once released, would leave very little room for third-party software vendors in this category, especially if it's offered for free. Many will simply have to leave the market.

OK, what about EasyMorph then? I believe Maestro is a good thing for EasyMorph. While some of our potential users might not realize at first that the two tools are in different categories, the introduction of Maestro actually does EasyMorph a big favor:

1. It proves that good data analysis requires good data preparation. Tableau developers are incredibly creative people. It never ceases to amaze me what kinds of hacks and workarounds they use in Tableau in order to bring the source data into the necessary shape. However, in many cases a decent non-linear data transformation tool would make this task straightforward, maintainable and debuggable.

2. It introduces the idea of a dedicated data transformation tool to a wide audience. When pitching EasyMorph to various organizations, I noticed that the idea of a specialized data transformation tool is not familiar to a non-technical audience. Developers understand the idea of a dedicated ETL tool and the benefits such a tool can provide. But business users (who make up the biggest part of the Tableau user base) usually have a hard time understanding the whole idea of visual data transformation. Maestro solves this task for us. With the power of Tableau's marketing :)

Someone said that it was Apple and Steve Jobs who taught smartphone users to buy apps and music instead of pirating them. Apple's App Store and iTunes changed the mindset. I believe that Maestro will reveal to many Tableau fans the convenience and power of visual self-service data preparation.

3. It makes it easier for us to explain to the Tableau audience what EasyMorph is. Now it's plain and simple: "EasyMorph is Maestro on steroids". The more people use Maestro, the more people will buy into the benefits and convenience of visual programming (yes, Ladies and Gentlemen, it's visual programming), which makes EasyMorph a logical next step for Maestro users once the complexity of the required calculations grows beyond trivial.

P.S. It's interesting to see that the "data kitchen" concept that I wrote about almost two years ago has been materializing more and more.